ABOUT US

What we do

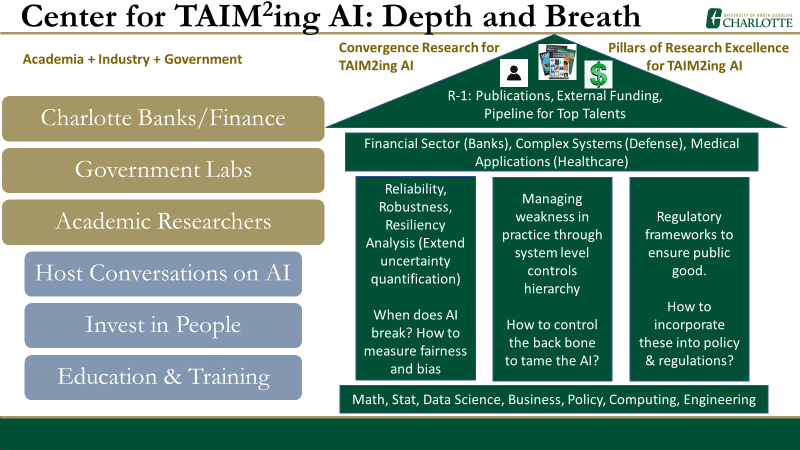

The Center for Trustworthy AI Through Model Risk Management (TAIM) seeks to provide a framework to ensure that the use of artificial intelligence (AI) and AI-based systems is safe, controlled, and managed in all applications. In doing so, the Center aims to become an international research hub in the emerging field of identifying and managing the risks associated with adopting algorithms.

To build this framework, the members of the Center will focus on understanding the weaknesses and reliability of AI through both mathematical and policy formulations. Our research will focus on three core areas:

Uncertainty Analysis for Artificial Intelligence Methods

Controls Hierarchy for Artificial Intelligence

Regulatory Framework for Artificial Intelligence

Who we are

Research like this does not take place in a bubble. Because AI touches almost every aspect of our lives, it is only prudent to assemble a multidisciplinary team from a wide variety of fields in the academic, industrial, and governmental sectors to work together on the framework at the heart of the Center's work.

Our founding team brings a wide diversity of experience, spanning fields from mathematics and data science to business, government policy, and philosophy.

Center Leadership

Director

Center Advisory Team

Affiliated Faculty

UNC Charlotte Team

Business, Industry and Government Partners

Institutional Partners

Postdocs

Center Staff

VISION & MISSION

VISION:

Ensure that AI is safe, controlled, and managed in all applications.

MISSION:

Extend the understanding of the weaknesses and reliability of AI through mathematical and policy formulations.

- Extend uncertainty analysis for AI methods

- Develop a hierarchy of controls for AI

- Develop a regulatory framework for AI